Coprocessors: The Right Hand of Blockchains

Exploring Coprocessors: Their Role, Benefits, and Key Use Cases in AI, Gaming, and DeFi ⚙️

Today’s post is sponsored by Nim Network.

Nim Network is building the future of AI gaming and consumer applications in crypto, creating in its chain an ecosystem that connects ownership with the funding of open-source AI and applications.

Introduction

Blockchains have evolved significantly, becoming a core technology in various sectors such as financial services and supply chain management. By enhancing security, transparency, and efficiency in transactions, as well as enabling the creation of dApps and smart contracts, blockchains address major issues such as trust, fraud prevention, and data immutability.

Despite these advancements, many Layer 1 (L1) blockchains face scalability challenges. High demand often leads to:

Network congestion

Slower transaction times

Increased costs

Layer 2 (L2) solutions and sharding are working to mitigate these issues. However, as usage grows, the need for faster and more efficient processing becomes crucial.

This is where “coprocessors” come in.

In traditional computing, coprocessors are specialized hardware designed to handle specific tasks more efficiently than general-purpose CPUs. Blockchain coprocessors apply the same idea: they work alongside the main processor (an L1 or L2 in this case) to execute demanding tasks such as cryptographic operations and complex calculations. This offloads work from the main chain, improving overall performance and throughput.

In today’s report, we will dive deeper into the ecosystem of coprocessors, exploring:

What coprocessors are 🔍

How they work ⚙️

Why they are needed 🚀

Use-cases and specific problems they help solve 🔧

Different players building coprocessors 🪛

The major focus will be on zero-knowledge (zk) coprocessors, as they are the most advanced ones currently available. Let’s dive in! 👇

What are coprocessors?

Coprocessors are specialized hardware designed to handle specific tasks alongside the main CPU, boosting efficiency and performance.

The concept of coprocessors originated in computer architecture to enhance the performance of traditional computers. Initially, computers relied solely on CPUs, but as tasks grew more complex, CPUs struggled to keep up. To address this, coprocessors like GPUs were introduced to handle specific tasks such as:

Graphics rendering 🎨

Encryption 🔒

Signal processing 📶

Scientific calculations 🔬

Examples of coprocessors include GPUs for graphics, cryptographic accelerators, and math coprocessors. By dividing tasks between the CPU and these specialized units, computers achieved significant performance improvements, enabling them to handle more sophisticated workloads efficiently.

In the context of blockchains, coprocessors help manage complex tasks off-chain, ensuring transparency and trust through verifiable computation. They utilize technologies such as zk-SNARKs, multi-party computation (MPC), and trusted execution environments (TEEs) to enhance security and scalability.

Why are coprocessors needed?

Coprocessors provide several benefits, particularly for chains like Ethereum that face scalability issues. These benefits include:

Enhanced scalability 📈

Gasless transactions 💸

Multi-chain support 🔗

To understand this better, consider this analogy:

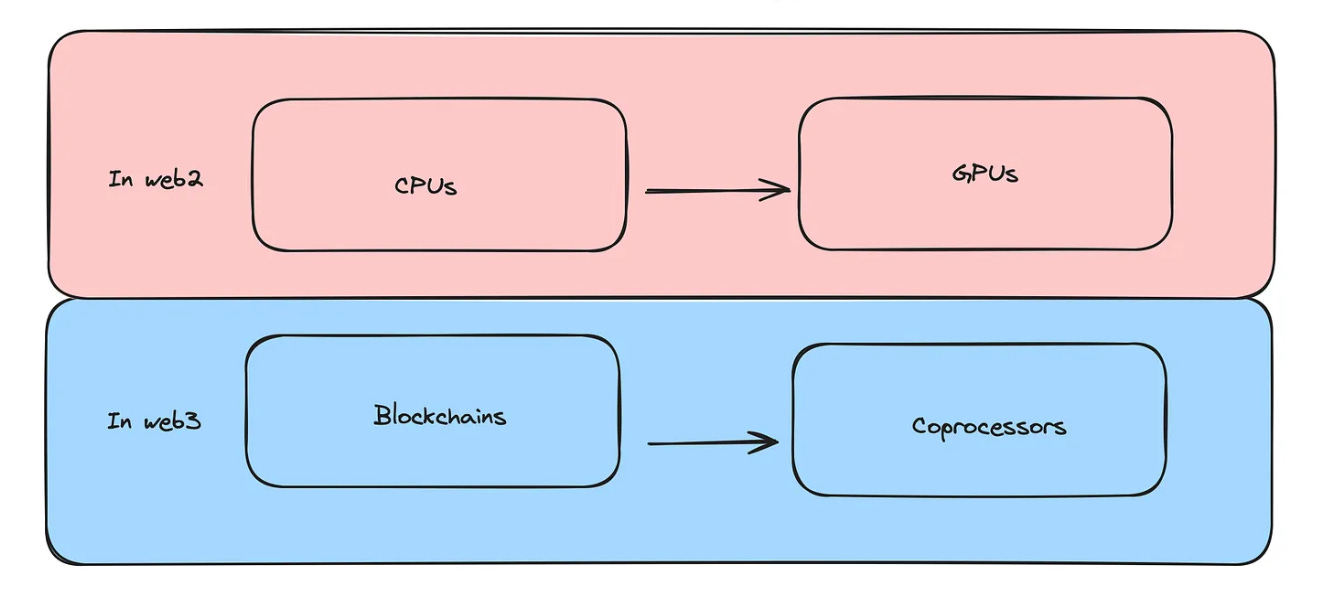

In web3, blockchains can be compared to CPUs in web2, while coprocessors can be compared to GPUs that handle heavy data and complex computational logic.

One significant issue in blockchains is the high cost of on-chain computation. While archival nodes store historical data, accessing this data is costly and complex for smart contracts. For example, the EVM can easily access recent block data, but its BLOCKHASH opcode only reaches back 256 blocks, and older state, transactions, and receipts are not directly readable from a contract.

Blockchain virtual machines such as the EVM are designed to execute smart contract code securely, not to handle large data sets or computation-heavy tasks. Thus, off-chain computation or other scalability techniques are necessary.
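To put rough numbers on that cost, here is a small back-of-the-envelope sketch. The 2,100 gas figure is the cost of a first ("cold") storage read under EIP-2929; the proof verification cost is an assumed, illustrative constant, since real verifier costs vary by proof system. The function names are hypothetical.

```python
import math

# Gas charged for the first read of a storage slot in a transaction (EIP-2929).
COLD_SLOAD_GAS = 2_100
# ASSUMPTION: illustrative flat cost of verifying one succinct proof on-chain.
# Real costs depend on the proof system and the verifier contract.
ASSUMED_PROOF_VERIFY_GAS = 300_000


def onchain_read_gas(num_slots: int) -> int:
    """Lower bound on gas for reading `num_slots` distinct cold storage slots."""
    return num_slots * COLD_SLOAD_GAS


def break_even_slots(verify_gas: int = ASSUMED_PROOF_VERIFY_GAS) -> int:
    """Smallest number of cold reads whose gas meets or exceeds the verify cost."""
    return math.ceil(verify_gas / COLD_SLOAD_GAS)


if __name__ == "__main__":
    for n in (100, 10_000, 1_000_000):
        print(f"{n:>9} slots: {onchain_read_gas(n):>13,} gas on-chain "
              f"vs ~{ASSUMED_PROOF_VERIFY_GAS:,} gas to verify one proof")
    print("break-even at", break_even_slots(), "slots")
```

Under these assumptions, on-chain reads grow linearly with the amount of data touched, while the cost of checking a single proof stays roughly flat. That gap is the basic economic argument for moving data-heavy work off-chain.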

Coprocessors provide a solution to these challenges by leveraging zk technology to enhance scalability. Here's how:

Efficient Large-Scale Computations: zk coprocessors handle large-scale computations while maintaining blockchain security.

Delegation of Historical Data Access: They allow smart contracts to delegate historical data access and computation off-chain using zk proofs, and then bring the results on-chain.

Improved Scalability and Efficiency: This separation improves scalability and efficiency without compromising security.

By adopting this new design, coprocessors enable applications to access more data and operate on a larger scale without incurring high gas costs.
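The "compute off-chain, verify on-chain" pattern can be sketched in a few lines. This is a simplified illustration, not how any particular coprocessor is implemented: it uses a plain Merkle inclusion proof as a stand-in for a zk proof, and it only shows verified data access, not verified computation. All names and the sample data are hypothetical.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Root of a binary Merkle tree over a non-empty list; an odd node is paired with itself."""
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def merkle_proof(leaves: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root, each tagged with whether the sibling sits on the left."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return proof


def verify(root: bytes, leaf: bytes, proof: list[tuple[bytes, bool]]) -> bool:
    """The cheap check a contract would run: recompute the root from one leaf and its proof."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


# Hypothetical historical records held off-chain; the "contract" stores only the root.
history = [f"block-{i}:balance={i * 10}".encode() for i in range(5)]
onchain_root = merkle_root(history)

# Off-chain service answers a query for record 3 and attaches a proof.
record, proof = history[3], merkle_proof(history, 3)
print(verify(onchain_root, record, proof))                      # honest answer
print(verify(onchain_root, b"block-3:balance=999999", proof))   # tampered answer
```

The chain keeps a small commitment, the heavy data stays off-chain, and the on-chain check touches only a logarithmic number of hashes. A zk coprocessor goes further by also proving that a computation over that data was carried out correctly.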

So, how do these services work? Here’s a great infographic to understand it better.

Types of coprocessors & their comparison with Rollups

When comparing coprocessors with other technologies, it's essential to consider the security model and assurance level needed for computations.

ZK Coprocessors

ZK coprocessors are ideal for sensitive calculations requiring maximum security and minimal trust. They use zero-knowledge proofs to ensure verifiable results without relying on the operator. However, this comes at the cost of efficiency and flexibility.

Multi-Party Computation (MPC) and Trusted Hardware

For less sensitive tasks like analytics or risk modeling, MPC and trusted hardware can be more efficient options. These methods provide weaker security assurances but support a wider range of computations.
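To give a feel for the MPC side, below is a minimal sketch of additive secret sharing, one of the simplest MPC building blocks. It assumes honest parties that follow the protocol, and it simulates all parties inside one process. The modulus, function names, and inputs are illustrative choices rather than part of any specific protocol mentioned here.

```python
import secrets

# ASSUMPTION: a public modulus larger than any sum the parties could produce.
MODULUS = 2**61 - 1


def share(value: int, num_parties: int) -> list[int]:
    """Split `value` into random additive shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(num_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_inputs: list[int]) -> int:
    """Compute the total of all inputs without any party revealing its own input."""
    n = len(private_inputs)
    # Party i splits its input and would send share j to party j.
    all_shares = [share(x, n) for x in private_inputs]
    # Party j adds the shares it received and publishes only that partial sum.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Anyone can combine the published partial sums to get the total.
    return sum(partial_sums) % MODULUS


if __name__ == "__main__":
    # Three hypothetical parties each hold a private exposure figure.
    print(secure_sum([120, 45, 300]))
```

Each individual share looks like random noise, so no single party learns another's input, yet the total is exact. The trust assumption is that the parties do not collude, which is why this approach offers weaker guarantees than a zk proof.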

FHE-based Coprocessors

FHE-based coprocessors, like those developed by Fhenix in collaboration with EigenLayer, offer significant improvements in confidential computing. These coprocessors maintain data confidentiality while offloading computational tasks.

The choice between these technologies depends on the risk tolerance and specific needs of the application.

Coprocessors vs. Rollups

One more comparison that’s often drawn is between coprocessors and rollups.

Rollups

Rollups focus on increasing transaction throughput and reducing fees by batching transactions off-chain and posting the resulting data and state commitments to the main chain. This makes them suitable for high-volume activity such as high-frequency trading.

Coprocessors

Coprocessors, on the other hand, handle complex logic and larger data volumes independently. They are ideal for advanced financial models and big data analysis across multiple blockchains and rollups.

Use-cases and applications

Coprocessors are highly modular in nature and can be leveraged for a wide variety of applications. Let’s explore some of the interesting use cases currently being built or that can be built:

Complex Calculations in DeFi Projects 💹

Coprocessors can handle complex calculations in DeFi projects, enabling sophisticated financial models and strategies that adapt in real time. They offload heavy computations from the main chain, which is crucial for running trading strategies, including high-frequency ones, efficiently and at scale.

Fully On-Chain Games 🎮

Coprocessors can offload complex features from the EVM, enabling richer game mechanics and state updates. They can support advanced game logic and AI-driven features, creating more immersive and engaging gameplay comparable to Web2 games.

Perpetual Swaps and Options 📈

Coprocessors enable transparent and verifiable margining logic for decentralized trading, enhancing the reliability of derivatives platforms. They ensure privacy and trust while providing sophisticated trading strategies and risk management practices.

Data Enhancement for Smart Contracts 📜

Coprocessors can provide data capture, calculation, and verification services, enabling smart contracts to process large amounts of historical data. This facilitates more advanced business logic and operational efficiency, enhancing the reliability of smart contracts.

DAOs and Governance 🏛️

Coprocessors can offload heavy computations to reduce gas fees for DAO operations, streamlining governance processes and decision-making. This improves the efficiency and transparency of DAO operations, supporting community-driven projects.

ZKML 🔍

Coprocessors can enable on-chain machine learning applications with verifiable off-chain computation, using historical data for security and risk management. This integration opens up new possibilities for advanced analytics and intelligent decision-making in blockchain applications.

KYC (Know Your Customer) 🆔

Coprocessors can fetch off-chain data and create verifiable proofs for smart contracts, maintaining user privacy while ensuring compliance. This makes KYC processes more secure, private, and efficient in Web3.

Social and Identity Applications 🧑‍🤝‍🧑

Coprocessors can be used for proving digital identity and past actions without revealing wallet addresses, using zero-knowledge proofs. This enhances privacy and trust in social and identity applications, enabling secure proof of credentials and activities.

The applications are almost endless, thanks to the flexibility coprocessors provide. The above examples are some of the exciting ones, especially those where teams are already building innovative projects.

Who is building these coprocessors?

So, the next question is: who are the teams actually building these coprocessors?

Let’s learn about a few of them 👇

→ Axiom

Axiom is a ZK coprocessor for Ethereum that provides smart contracts with secure and verifiable access to all on-chain data. It uses zero-knowledge proofs to read data from block headers, states, transactions, and receipts, and performs computations like analytics and machine learning.

By generating ZK validity proofs for each query result, Axiom ensures the correctness of data fetching and computation, which are then verified on-chain. This trustless verification process allows for more reliable dApp development.

→ RISC Zero

RISC Zero focuses on the verifiable execution of computations for blockchain smart contracts. Developers can write programs in Rust and deploy them on the network, where zero-knowledge proofs ensure the correctness of each program's execution.

Its main components are the zkVM and Bonsai. The zkVM is a zero-knowledge virtual machine built on the RISC-V instruction set architecture, and Bonsai integrates with it to provide high-performance proofs for general-purpose use cases.

→ Brevis

Brevis is a ZK coprocessor that enables decentralized applications to access and compute data across multiple blockchains in a trustless manner. Its architecture includes:

zkFabric for synchronizing block headers

zkQueryNet for processing data queries

zkAggregatorRollup for verifying and submitting proofs to blockchains.

→ Lagrange

Lagrange is an interoperability zk coprocessor protocol that supports applications requiring large-scale data computation and cross-chain interoperability. Its core product, ZK Big Data, processes and verifies cross-chain data, generating ZK proofs through a highly parallel coprocessor.

Lagrange includes a verifiable database, dynamic updates, and SQL query capabilities from smart contracts. The protocol supports complex cross-chain applications and integrates with platforms like EigenLayer, Mantle, and LayerZero.

Coprocessors in AI

Coprocessors enhance crypto x AI applications by offloading complex computations, ensuring efficiency, security, and scalability across various tasks such as DeFi management, personalized assistants, and secure data processing. Here are some notable projects utilizing coprocessors for different use cases and technologies:

→ Phala Network

Phala Network integrates blockchain with Trusted Execution Environments (TEE) for secure AI interactions. Their Phat Contracts offload complex computations to Phala’s network using coprocessors, which is crucial for AI-driven DeFi management tasks like portfolio management and yield farming.

Phala's cross-chain interoperability enables AI agents to facilitate transactions across chains, while privacy-preserving computation secures sensitive data.

→ Ritual Network

Ritual is developing the first sovereign, community-owned AI network with Infernet, a decentralized oracle network (DON) that allows smart contracts to access AI models.

Ritual Network’s strategic partnerships highlight its modular nature:

EigenLayer: Utilizes a restaking mechanism to enhance economic security and protect against potential threats.

Celestia: Provides access to Celestia’s scalable data availability layer, improving data management efficiency and overall scalability.

→ Modulus Labs

Modulus Labs focuses on bringing complex machine learning algorithms directly on-chain using zk coprocessors. Their projects demonstrate the variety of applications possible:

Rockybot: An on-chain AI trading bot leveraging coprocessors for high-frequency trading operations.

Leela vs The World: An interactive AI game that uses coprocessors to handle in-game move tracking.

zkMon: Uses zero-knowledge proofs to authenticate AI-generated art.

→ Giza

Giza is a platform designed to streamline the creation, management, and hosting of verifiable machine learning models using zero-knowledge (ZK) proofs. It allows developers to convert any ML model into a verifiable model, ensuring tamper-evident proofs of ML executions.

Giza provides a control panel for AI engineers to monitor, schedule, and deploy AI actions with ease, integrating seamlessly with various cloud providers and ML libraries. The platform also supports protocol integration through EVM verifiers, enhancing efficiency, revenue growth, and adoption in decentralized applications.

→ EZKL

EZKL integrates zk-SNARKs with deep learning models and computational graphs, using familiar libraries like PyTorch or TensorFlow. It allows developers to export these models as ONNX files and generate zk-SNARK circuits, ensuring privacy and security by proving statements about computations without revealing the underlying data.

These proofs can be verified on-chain, in browsers, or on devices. EZKL supports various applications, including financial models, gaming, and data attestation, and offers tools for Python, JavaScript, and command-line interfaces to simplify off-chain computation while maintaining security.

Future of Coprocessors

Overall, coprocessors are crucial to the blockchain ecosystem. I see them as the "steroids" that let these chains operate faster and more securely.

They will be crucial for a variety of applications including:

Developing trustless and censorship-resistant AI applications

Enabling verifiable analysis of large data sets

Improving the reliability and transparency of AI-driven applications in crypto

Allowing smart contracts to access more data and off-chain computing resources at lower costs without compromising decentralization

Coprocessors could revolutionize sectors such as decentralized finance (DeFi), where they could help platforms like SushiSwap and Uniswap stay competitive.

However, like any technology, coprocessors come with their own set of challenges, such as development complexity and high hardware costs.

Efforts to address these challenges are ongoing. For instance, the partnership between Fhenix and EigenLayer aims to enhance computational tasks and accelerate confidential on-chain transactions. Such collaborations are crucial for overcoming existing obstacles and unlocking the full potential of coprocessors in the space.

Closing thoughts

The coprocessor ecosystem is evolving rapidly, with a diverse range of players contributing to both general-purpose solutions and specialized applications, such as those from Phala and Ritual tailored for the AI sector.

As this technology continues to evolve, we anticipate the emergence of new use cases and innovative applications. The future of coprocessors looks bright, and we are excited to observe how this space develops and transforms.

We hope you found this report informative and engaging. Thank you for reading!